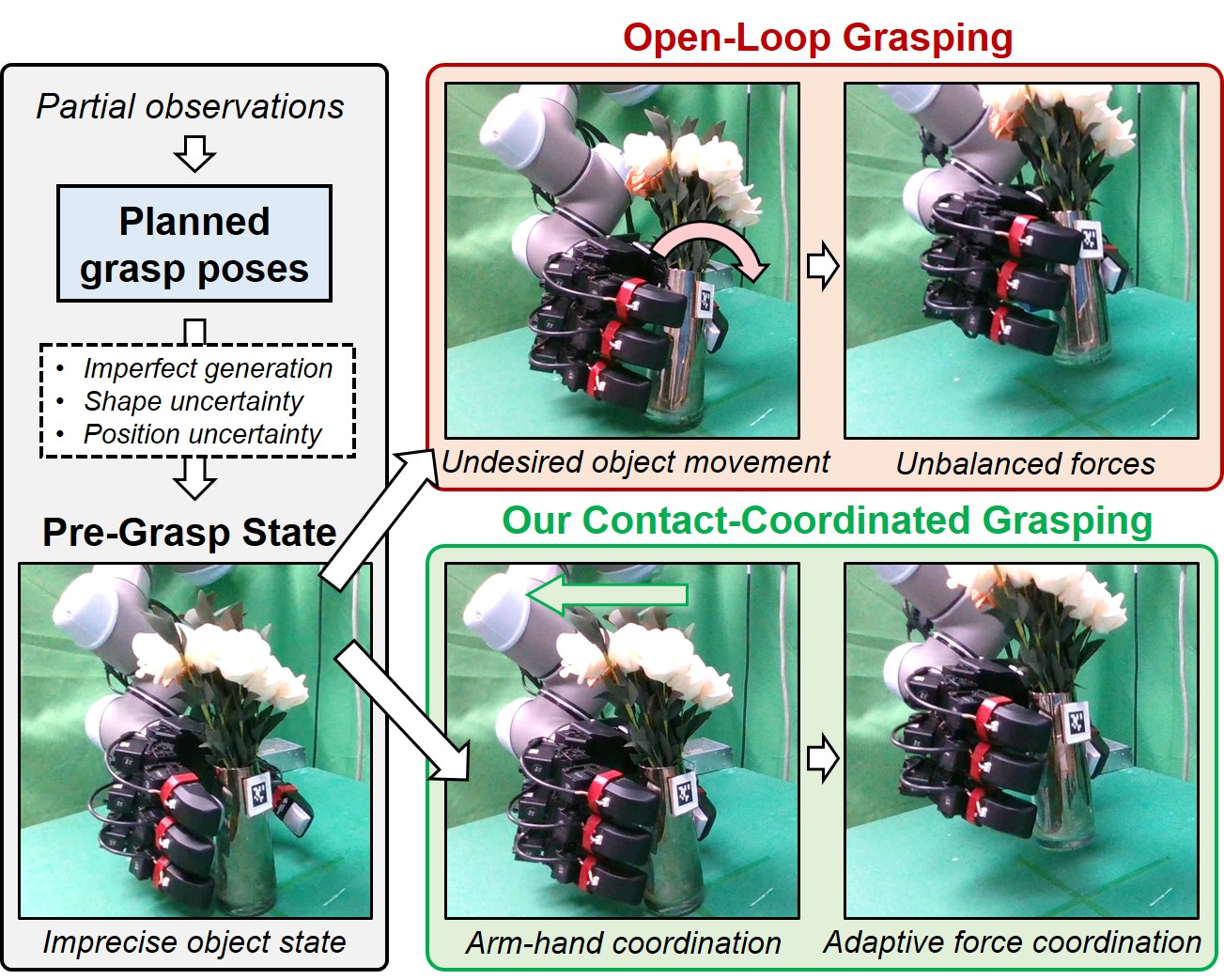

While recent research has focused heavily on dexterous grasp pose generation, less attention has been devoted to the execution of planned grasps. Under shape and position uncertainty, open-loop execution often yields uncoordinated contacts, causing undesired in-hand object motion and even grasp failures. To address this, this paper proposes a tactile-driven model predictive controller for adaptive and delicate execution of diverse dexterous grasps. Our approach emphasizes multi-contact coordination across both approaching and grasping phases, with three key novelties: (i) coordination-aware phase separation, (ii) arm-hand coordination to compensate for position errors, and (iii) adaptive force coordination to increase contact forces in a balanced manner. An analytical model is employed to relate contact forces to robot joint motions for predictive control. Our formulation imposes no restrictions on grasp types or contact configurations and integrates seamlessly with state-of-the-art grasp pose generation methods. We validate the approach through large-scale simulations involving 15k grasps across 478 objects on three robotic hands, and real-world experiments on 8 objects. Results demonstrate that our method achieves higher grasp success rates and reduced undesired object movements.

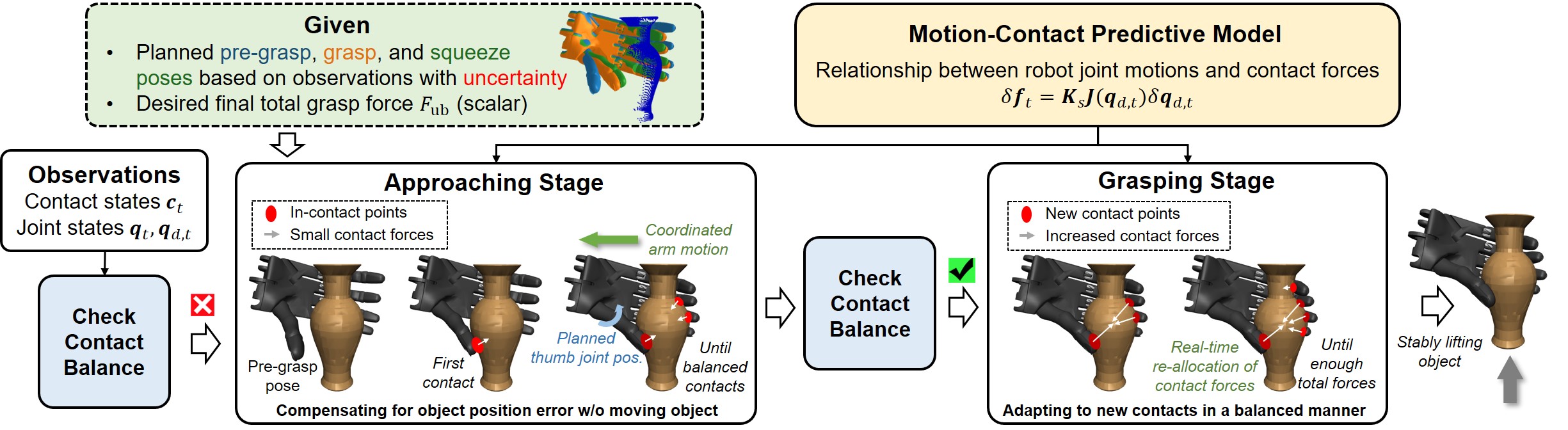

Overview of our tactile-driven coordinated contact control method for adaptive execution of planned grasp poses generated from observations with uncertainty. Our method employs a coordination-aware separation of the approaching and grasping phases, using wrench balance as the criterion. During the approaching phase, the fingers make contact with the object using gentle forces, while coordinated arm motions compensate for object position errors without deviating from the planned finger configurations. Once sufficient contacts are established, the fingers increase contact forces in a balanced manner to reach the desired total grasp force, during which the desired force of each contact is re-allocated in real time to adapt to changes in contact states.
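To make the idea of a wrench-balance criterion concrete, here is a minimal sketch of one way such a check could look. It is not the criterion used in the paper, which is not specified in this section. The function names, the assumption that every contact pushes with the same gentle force along its inward normal, and the tolerance values are all hypothetical choices made for illustration.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def norm(v):
    """Euclidean norm of a 3D vector."""
    return math.sqrt(sum(x * x for x in v))


def net_wrench(positions, forces):
    """Sum the contact forces and their torques about the origin."""
    total_force = [0.0, 0.0, 0.0]
    total_torque = [0.0, 0.0, 0.0]
    for p, f in zip(positions, forces):
        tau = cross(p, f)
        for i in range(3):
            total_force[i] += f[i]
            total_torque[i] += tau[i]
    return total_force, total_torque


def is_wrench_balanced(positions, inward_normals, gentle_force=0.2,
                       force_tol=0.05, torque_tol=0.005):
    """Hypothetical check: do equal gentle forces along the inward
    normals of the current contacts roughly cancel each other?

    gentle_force is in N, force_tol in N, torque_tol in N*m; all three
    values are illustrative assumptions, not values from the paper.
    """
    forces = [tuple(gentle_force * c for c in n) for n in inward_normals]
    f, t = net_wrench(positions, forces)
    return norm(f) <= force_tol and norm(t) <= torque_tol


# Two opposing fingertips pinching along the x axis: forces cancel.
pinch_positions = [(0.03, 0.0, 0.0), (-0.03, 0.0, 0.0)]
pinch_normals = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pinch_balanced = is_wrench_balanced(pinch_positions, pinch_normals)

# A single contact cannot balance itself, so the approach would continue.
single_balanced = is_wrench_balanced(pinch_positions[:1], pinch_normals[:1])
```

In this toy setting, a controller would stay in the approaching phase while the check fails and switch to the grasping phase once it passes. A real criterion would also need to account for friction and for unequal contact forces.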

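The key contributions and novelties of our approach beyond existing methods are (i) coordination-aware phase separation, (ii) arm-hand coordination to compensate for position errors, and (iii) adaptive force coordination to increase contact forces in a balanced manner.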

We validate the approach through large-scale simulations involving 15k grasps across 478 objects on the Shadow, Allegro, and LEAP Hands.

Single-view point clouds serve as the observations for the grasp pose generation network. Some examples achieved using our method.

Some examples of comparison with baselines.

The initial object positions are perturbed by 2 cm along eight uniformly distributed planar directions. Some examples achieved using our method.
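The perturbation pattern described above can be sketched as follows. This is a minimal illustration only: the function name, the choice of the x-y plane as the perturbation plane, and the choice to start the first direction along the +x axis are assumptions, since the section does not specify them.

```python
import math


def planar_perturbations(magnitude_m=0.02, num_directions=8):
    """Return (dx, dy) offsets of equal length, evenly spaced in angle.

    magnitude_m is the displacement in meters (2 cm by default).
    The first direction is assumed to point along +x.
    """
    offsets = []
    for k in range(num_directions):
        angle = 2.0 * math.pi * k / num_directions
        offsets.append((magnitude_m * math.cos(angle),
                        magnitude_m * math.sin(angle)))
    return offsets


# Eight offsets, 45 degrees apart, each 2 cm long.
offsets = planar_perturbations()
```

Each offset would be added to the nominal object position before a trial, giving eight perturbed trials per grasp.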

Some examples of comparison with baselines.

Real-world experiments are conducted on a UR5 arm and a LEAP Hand. Each fingertip is equipped with a vision-based tactile sensor named Tac3D.

Single-view point clouds serve as the observations for the grasp pose generation network.

We further conduct experiments under large position errors, using the glass vase and mosquito repellent bottle. The object is displaced by approximately 2 cm from its original position, either towards the thumb or index finger.